IBM researchers say they’ve solved a big piece of the quantum computing puzzle with a new system for protecting against errors that can crop up among quantum bits, or ‘qubits.’

The issue the team is addressing is similar to an error that can crop up among the bits storing data in traditional computing. Sometimes, a bit that ought to be a 0 turns up as a 1 (or vice versa), resulting in inaccurate or broken data. To deal with this, an extra "parity" bit is added whose state indicates whether one of the other bits has flipped.
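As a rough illustration of that classical check, here is a minimal sketch of an even-parity scheme. The function names `add_parity` and `parity_ok` are made up for this example, and real memory hardware typically uses more elaborate codes.

```python
def add_parity(bits):
    """Append one extra bit so the total number of 1s in the word is even."""
    return bits + [sum(bits) % 2]


def parity_ok(word):
    """Return True if the word still contains an even number of 1s."""
    return sum(word) % 2 == 0


word = add_parity([1, 0, 1, 1])   # data bits plus the parity bit
corrupted = word.copy()
corrupted[2] ^= 1                 # a single bit flips in storage

print(parity_ok(word))        # the untouched word passes the check
print(parity_ok(corrupted))   # the flipped bit is detected
```

A single parity bit only reveals that an odd number of bits changed. It cannot say which bit flipped, so it detects errors rather than correcting them.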

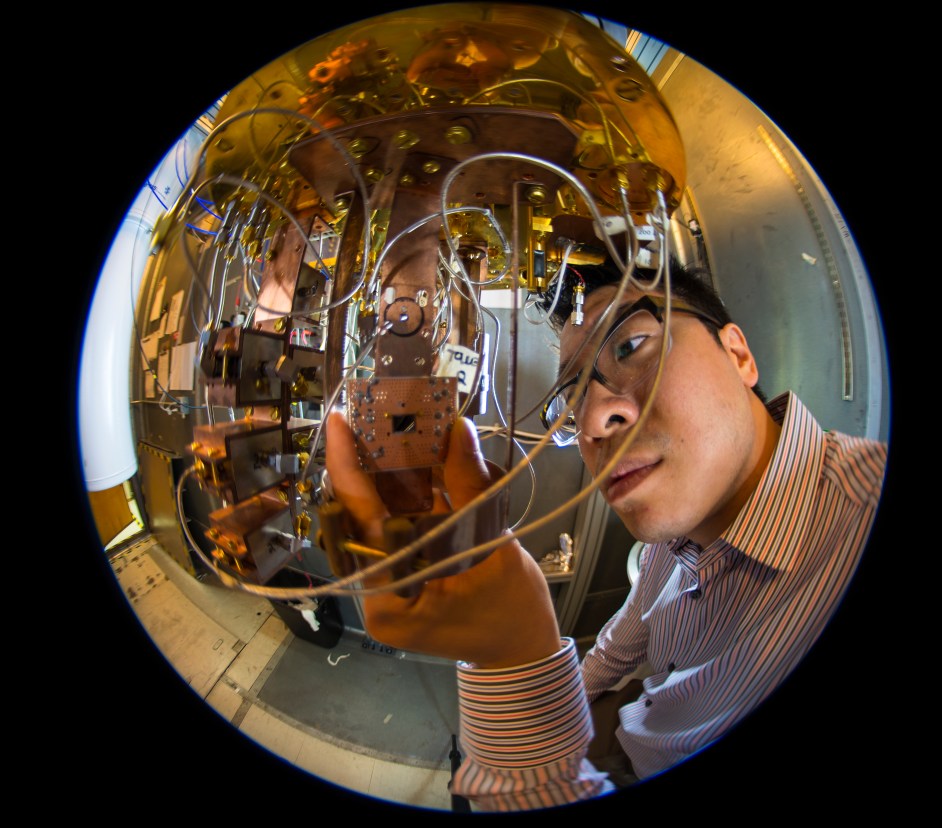

Jerry Chow, Manager of Experimental Quantum Computing at IBM Research, told TechCrunch his team is looking for those same bit-flip errors, but also something a bit gnarlier that’s unique to qubits. Given their quantum nature, qubits can be 0, 1 or a superposition of both, and the “phase” of the relationship between 0 and 1 can flip between positive and negative.
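To make the two error types concrete, the sketch below models a single qubit as a pair of amplitudes for 0 and 1. This is a textbook toy model, not IBM's code, and the helper names are assumptions chosen for illustration.

```python
import math


def bit_flip(state):
    """Swap the amplitudes of 0 and 1 (the quantum analogue of a flipped bit)."""
    a, b = state
    return (b, a)


def phase_flip(state):
    """Negate the amplitude of 1, changing the sign between 0 and 1."""
    a, b = state
    return (a, -b)


def probabilities(state):
    """Chance of reading 0 or 1 if the qubit is measured directly."""
    return tuple(abs(x) ** 2 for x in state)


s = 1 / math.sqrt(2)
plus = (s, s)                 # equal superposition with a positive phase
flipped = phase_flip(plus)    # same superposition with a negative phase

print(probabilities(plus))
print(probabilities(flipped))
```

Both states give the same odds of reading 0 or 1, which is why a phase flip is harder to spot than a bit flip: measuring the qubit's value directly does not reveal it.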

In the system designed at IBM Research, two qubits hold data and another two check for errors: the state of one checking qubit depends on whether a data qubit has flipped to the wrong value, and the state of the other depends on whether a data qubit has flipped to the wrong phase.
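The idea of two separate checks can be sketched with a small simulation. The example assumes the two data qubits share an entangled state of the form (|00> + |11>)/√2, which the article does not specify, and it computes the two parity checks directly instead of modeling the checking qubits themselves. All names are hypothetical, and the code handles real-valued amplitudes only.

```python
import math

# Amplitudes for the two data qubits, indexed by the bit strings 00, 01, 10, 11.
S = 1 / math.sqrt(2)
BELL = [S, 0.0, 0.0, S]   # assumed starting state: (|00> + |11>) / sqrt(2)


def apply_x(state, qubit):
    """Bit flip on one data qubit: swap amplitudes that differ in that bit."""
    return [state[i ^ (1 << qubit)] for i in range(4)]


def apply_z(state, qubit):
    """Phase flip on one data qubit: negate amplitudes where that bit is 1."""
    return [-a if (i >> qubit) & 1 else a for i, a in enumerate(state)]


def zz_check(state):
    """Value-parity check: +1 if the two bits agree, -1 if they differ."""
    return round(sum((1 if i in (0, 3) else -1) * a * a
                     for i, a in enumerate(state)))


def xx_check(state):
    """Phase-parity check: overlap of the state with both bits flipped."""
    return round(sum(state[i] * state[i ^ 3] for i in range(4)))


def syndrome(state):
    """Pair of check results; (1, 1) means no error was detected."""
    return (zz_check(state), xx_check(state))


print(syndrome(BELL))                           # no error
print(syndrome(apply_x(BELL, 0)))               # bit flip trips the first check
print(syndrome(apply_z(BELL, 0)))               # phase flip trips the second
print(syndrome(apply_z(apply_x(BELL, 0), 0)))   # both errors trip both checks
```

Each error type changes the sign of exactly one check, so reading the two results together distinguishes a bit flip, a phase flip, or both, without reading out the data qubits' values.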

Chow says this method scales linearly with the number of qubits in a system: larger lattices will need more of these measurement qubits, but that number won’t grow exponentially with the capability of the system.

So why is handling errors so important for quantum computing? These errors emerge due to interference from factors like heat, electromagnetic radiation and material defects, which are all unfortunately common. If the results from simulations of the interaction of molecules are to be trusted, those using the data need to know there aren’t random errors throughout.

Next up from IBM’s Experimental Quantum Computing team is a similar lattice with eight qubits. “Thirteen or 17 qubits is the next important milestone,” Chow said, because it’s at that point they’ll have the ability to start encoding logic into the qubits, which is when things start to get really interesting.
